Emergent Levels of Optimization

This post is a quick introduction to my most recent independent research direction in the field of AI alignment.

Introduction

The Emergent Levels of Optimization (ELOO) framework addresses a critical need in alignment research: achieving conceptual clarity across disparate perspectives on AI risk. As in the parable of the blind men examining different parts of an elephant, researchers often focus on specific aspects of the alignment problem without a unified understanding of how these elements relate. ELOO aims to provide that holistic view by establishing a rigorous conceptual foundation that connects evolutionary biology, cultural development, and artificial intelligence as manifestations of the same underlying principles.

The ELOO Framework: Foundation and Structure

At the heart of ELOO is a fundamental observation: patterns that persist through time are selected for in our universe. This principle—which we call the Universal Base Optimizer (U)—creates a selection pressure for stable configurations across all physical systems. From this foundation, increasingly sophisticated forms of optimization emerge through natural processes.

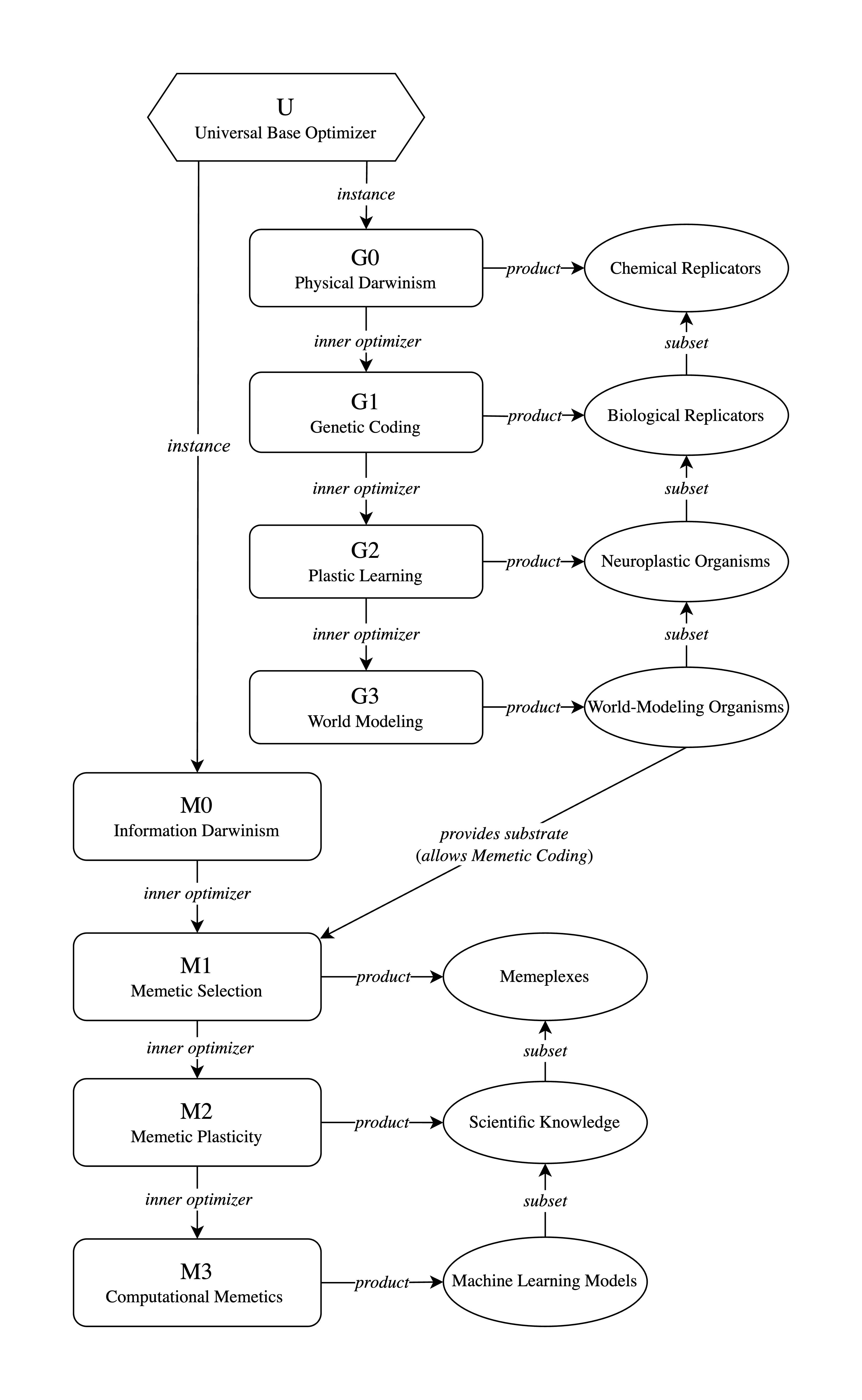

As illustrated in the diagram above, ELOO maps these emergent optimization systems into two parallel lineages:

- The Genetic lineage (G0-G3):

  - G0 (Physical Darwinism): Basic chemical replicators emerging from physics

  - G1 (Genetic Coding): DNA-based replication enabling stable exploration of traits

  - G2 (Plastic Learning): Neural networks enabling learning within lifetimes

  - G3 (World Modeling): Complex organisms with explicit world models (humans)

- The Memetic lineage (M0-M3):

  - M0 (Information Darwinism): Social dynamics between world-modeling organisms

  - M1 (Memetic Selection): Transmission of ideas and practices between humans

  - M2 (Memetic Plasticity): Systematic knowledge-building methods like science

  - M3 (Computational Memetics): Artificial systems building toward autonomy

The diagram highlights several critical relationships within this framework. First, notice that each level produces specific products—from chemical replicators at G0 to machine learning models at M3. Second, each level serves as an “inner optimizer” for the next level, creating more sophisticated optimization processes. Finally, observe the crucial connection between G3 and M1: world-modeling organisms (primarily humans) provide the substrate that enables memetic selection to emerge.

Each optimization level emerges when it develops what we call the “capability triad”:

- Generation of variations (creating options)

- Selection among those variations (determining which options work better)

- Accumulation of improvements over time (building on previous successes)

This triad enables open-ended advancement, allowing each level to create increasingly sophisticated patterns that persist through time.
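To make the triad concrete, here is a minimal sketch of a loop that contains all three components. It is a toy illustration rather than part of the ELOO framework itself: the function names, the single real-valued "trait", and the fitness landscape with a peak at 3 are all assumptions made for the example.

```python
import random


def optimize(fitness, generations=200, offspring=20, step=0.1, seed=0):
    """Toy optimizer exhibiting generation, selection, and accumulation."""
    rng = random.Random(seed)
    best = 0.0  # accumulated state carried from one generation to the next
    history = [fitness(best)]
    for _ in range(generations):
        # 1. Generation: propose random variations around the current best.
        variants = [best + rng.gauss(0.0, step) for _ in range(offspring)]
        # 2. Selection: pick the variant that scores highest.
        candidate = max(variants, key=fitness)
        # 3. Accumulation: keep the improvement so later rounds build on it.
        if fitness(candidate) > fitness(best):
            best = candidate
        history.append(fitness(best))
    return best, history


def fitness(x):
    # Hypothetical landscape with a single peak at x = 3 (an assumption).
    return -(x - 3.0) ** 2


best, history = optimize(fitness)
print(f"final trait: {best:.3f}, final fitness: {history[-1]:.5f}")
```

Removing any one of the three steps breaks the loop: without generation there is nothing to choose from, without selection the trait drifts at random, and without accumulation each round starts from scratch.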

As shown in the diagram, ELOO distinguishes between optimization processes (the rectangular nodes) and their products (the oval nodes). For example, genetic coding (G1) is an optimization process, while biological replicators are its products. This distinction resolves many confusions in alignment discourse by clarifying exactly which entities are being compared when discussing “alignment” relationships.

Key Insights for Alignment Research

With this framework established, ELOO offers several powerful insights that reshape how we understand AI alignment challenges:

Cross-Level Feedback Dynamics: When a new optimization level emerges, it can create what we call “retroflex pressure” on previous levels, changing the selection landscape they operate in. Looking at the diagram, you can see how higher levels can influence lower levels despite the primarily upward flow of optimization. For instance, when cultural evolution (M1) developed agriculture, it created retroflex pressure on genetic evolution (G1) by changing which human traits were advantageous. This led to genetic adaptations such as lactase persistence (adult lactose tolerance) in populations with dairy farming traditions. Human populations could accommodate this shift because it unfolded over thousands of years.

The critical concern with artificial intelligence is that M3 systems might create retroflex pressure on human systems (G3) at speeds that make adaptation impossible. Where humans had millennia to adapt to cultural evolution’s pressure, AI systems might create environmental changes too rapid for meaningful human response.
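The speed argument can be illustrated with a deliberately simple toy model. This is a sketch under stated assumptions, not a claim about real populations: `adaptation_lag`, `env_speed`, and `max_step` are hypothetical names, the environment's optimum moves at a constant rate, and the adapting system can close at most `max_step` of the gap per generation.

```python
def adaptation_lag(env_speed, max_step=1.0, generations=100):
    """Return how far a trait trails a moving optimum after some generations.

    env_speed: how far the optimum moves each generation (assumed constant).
    max_step:  the largest change the adapting system can make per generation.
    """
    optimum = 0.0
    trait = 0.0
    for _ in range(generations):
        optimum += env_speed                          # retroflex pressure shifts the target
        gap = optimum - trait
        trait += max(-max_step, min(max_step, gap))   # bounded adaptation toward the target
    return optimum - trait


slow_lag = adaptation_lag(env_speed=0.5)  # environment changes slower than adaptation
fast_lag = adaptation_lag(env_speed=2.0)  # environment changes faster than adaptation
print(slow_lag, fast_lag)
```

In this toy setting the lag stays bounded while `env_speed` is at most `max_step` and grows with every generation once it exceeds it, which is the qualitative shape of the concern described above.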

Reframing Alignment Challenges: ELOO reveals that “alignment” is not a binary property but a relationship between optimization levels. The diagram helps visualize why humans aren’t directly “aligned” with genetic fitness—we’re products of G3 (World Modeling), which is separated from G1 (Genetic Coding) by intermediate optimization levels operating on faster timescales.

Similarly, there’s no inherent reason to expect AI systems (potential products of M3) to naturally align with human goals (products of G3). Just as humans don’t inherently optimize for bacterial reproduction (and often actively prevent it when harmful), advanced AI systems won’t inherently optimize for human welfare without specific design interventions.

Timescale Mismatch: Perhaps the most significant risk factor ELOO identifies is the extreme difference in speed between biological and computational selection processes. Natural selection required millions of years to produce complex adaptations; human learning occurs over years; cultural evolution progresses over decades or centuries. But computational systems can run selection processes in seconds or minutes.

This timescale mismatch creates a qualitative change in how optimization processes operate—potentially moving too quickly for human oversight to remain meaningful. The alignment problem can thus be reformulated as: How do we ensure that when selection processes move to computational timescales, they remain compatible with human values?
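A rough back-of-the-envelope calculation shows the size of the gap. The representative values below (one million years, one hundred years, one year, one minute) are assumptions chosen to match the ranges mentioned above, not measurements.

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year, in seconds

# Assumed representative timescales, in seconds, for one round of selection.
TIMESCALES = {
    "natural selection": 1e6 * SECONDS_PER_YEAR,   # "millions of years"
    "cultural evolution": 100 * SECONDS_PER_YEAR,  # "decades or centuries"
    "human learning": 1 * SECONDS_PER_YEAR,        # "years"
    "computational selection": 60.0,               # "seconds or minutes"
}


def orders_of_magnitude_gap(slow_seconds, fast_seconds):
    """Base-10 logarithm of the ratio between two timescales."""
    return math.log10(slow_seconds / fast_seconds)


fast = TIMESCALES["computational selection"]
gaps = {
    name: orders_of_magnitude_gap(seconds, fast)
    for name, seconds in TIMESCALES.items()
    if name != "computational selection"
}
for name, gap in gaps.items():
    print(f"{name}: about {gap:.1f} orders of magnitude slower")
```

Under these assumed values, a one-minute computational selection cycle is roughly 5.7 orders of magnitude faster than a year of human learning and roughly 11.7 orders of magnitude faster than a million years of natural selection.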

Substrate Transition: The diagram shows a critical connection between G3 products (world-modeling organisms) and M1 (Memetic Selection). This connection highlights a crucial distinction between previous optimization transitions and the current one. The M1→G3 retroflex pressure was largely beneficial to humans because humans were the substrate of M1: cultural evolution depended on human brains and social interactions to function.

By contrast, as AI systems become more capable, the substrate of optimization is shifting from biological to computational. While current systems still primarily depend on human engineering and oversight, we’re witnessing a gradual reduction in this dependence. This shift undermines the natural alignment that existed in previous transitions, creating unique challenges for ensuring AI systems remain beneficial for humanity.

Practical Applications for Alignment Research

The ELOO framework offers concrete benefits for alignment research:

- Resolving Conceptual Disagreements: Debates about whether evolution provides evidence for discontinuities in AI capabilities become more productive when we clarify the specific transitions being compared. ELOO shows that the proper comparison isn’t between evolution and gradient descent algorithms, but between the transition from genetic to cultural evolution and the potential transition from human culture to AI systems.

- Identifying Crucial Interventions: ELOO suggests that maintaining meaningful human participation in selection processes is critical, even as they operate at computational speeds. Approaches like “cyborgism” (augmenting human capabilities rather than replacing them) may help preserve human values during the transition to increasingly computational optimization, though they face challenges in balancing enhancement with constraints that may work against U’s fundamental selection for persistence.

- Anticipating System-Level Dynamics: By understanding how different optimization levels create retroflex pressure on previous levels, we can better anticipate how AI development might affect human social, economic, and political systems. For instance, ELOO provides a framework for analyzing how computational optimization might create selection pressures that human institutions aren’t designed to handle.

- Evaluating Alignment Strategies: Different approaches to alignment can be assessed based on how effectively they address the fundamental challenges ELOO identifies. Technical alignment methods focused solely on getting AI systems to follow instructions may miss the broader challenge of managing the transition between optimization substrates.

Conclusion and Further Development

As we approach increasingly powerful AI systems, the conceptual foundations of alignment research become more important than ever. ELOO offers a coherent framework that places current technical approaches in a broader context, identifies potential blind spots in existing strategies, provides a common language for researchers across different subfields, and generates testable predictions about how optimization processes emerge and interact.

The full details of the ELOO framework will be available in my forthcoming forum post, which elaborates on these concepts and provides detailed analysis of how they apply to specific alignment challenges. The framework continues to evolve as we refine our understanding of optimization processes and their implications for AI development.

By providing a unified conceptual foundation grounded in fundamental physical dynamics, ELOO aims to transform alignment research from disconnected perspectives into a coherent scientific endeavor with shared concepts, terminology, and priorities—illuminating the entire elephant so we can address AI risks more effectively.